All of a sudden, “curation” is one of the hottest words in the Web 2.0 world. That’s because it’s an idea that addresses a problem humans have never confronted before: too much information. In the process, it’s creating some compelling new ways to derive value from content.

Content curation is about filtering the stuff that people really need out of all the noise around it. In the same way that museum curators choose which items from a collection to put on display, content curators select and publish information that’s of interest to a particular audience.

The problem with curation is that it’s labor-intensive. Someone has to sift through all that source information to decide what to keep. This task has never been easy to automate. Keyword filtering has all kinds of shortcomings, and RSS feeds are little better than headline services.

I’ve recently been working with a startup that’s developed an innovative technology that vastly improves the speed and quality of content curation. CIThread has spent the last 15 months building an inference engine that uses artificial intelligence principles to give curators a kind of intelligent assistant. The company is attacking the labor problem by making curators more productive rather than trying to replace them.

Full disclosure: I have received a small equity stake and a referral incentive from CIThread as compensation for my advice. Other than that, my pay has amounted to a couple of free lunches. I just think these guys are onto something great.

CIThread (the name stands for “Collective Intelligence Threading” and yeah, they know they have to change it) essentially learns from choices that an editor or curator makes and applies that learning to delivering better source material. More wheat, less chaff.

The curator starts by presenting the engine with a basic set of keywords. CIThread scours the Web for relevant content, much like a search engine does. Then the curator combs through the results to make decisions about what to publish, what to promote and what to throw away.

As those decisions are made, the engine analyzes the content to identify patterns, then uses what it has learned to deliver better source content. Connections to popular content management systems make it possible to automatically publish content to a website and even syndicate it to Twitter and Facebook without leaving the CIThread dashboard.
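To make that feedback loop concrete, here’s a toy sketch in Python. To be clear, this is my own illustration and not CIThread’s engine, which I can’t show you: it assumes a simple term-weighting scheme, and the `FeedbackRanker` class, its method names and the `step` value are all hypothetical. The idea is just that every publish-or-discard decision nudges the weights of the words in that item, so later candidates that resemble published items float to the top.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class FeedbackRanker:
    """Hypothetical term-weight ranker that learns from curator decisions."""

    def __init__(self, seed_keywords):
        # The curator's starting keywords each get an initial weight of 1.0.
        self.weights = Counter({kw.lower(): 1.0 for kw in seed_keywords})

    def score(self, text):
        # Sum the learned weights of the distinct terms in the text.
        # Terms never seen before contribute 0.
        return sum(self.weights[term] for term in set(tokenize(text)))

    def record_decision(self, text, published, step=0.5):
        # Published items push their terms up; discarded items push them down.
        delta = step if published else -step
        for term in set(tokenize(text)):
            self.weights[term] += delta

    def rank(self, candidates):
        # Highest-scoring candidates first.
        return sorted(candidates, key=self.score, reverse=True)


ranker = FeedbackRanker(["curation"])
ranker.record_decision("Museum curators select new exhibits", published=True)
ranker.record_decision("Celebrity gossip roundup", published=False)
ranked = ranker.rank(["Celebrity gossip today", "Museum exhibits opening"])
```

After those two decisions, the museum story outranks the gossip story even though neither contains the original keyword. A real inference engine would use far richer signals than word counts, but the loop is the same: decide, learn, re-rank.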

There’s intelligence on the front end, too. CIThread can also tie in to Web analytics engines to fold audience behavior into its decision-making. For example, it can analyze content that generates a lot of views or clicks and deliver more source material just like it to the curator. All of these factors can be weighted and varied via a dashboard.
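Here’s one way to picture that weighting, again as my own rough sketch rather than anything taken from the product. It assumes each signal has already been scaled to a 0-to-1 range; the signal names (`editor_fit`, `views`, `clicks`) and the `blended_score` function are made up for illustration. The dashboard sliders would correspond to the `weights` dictionary.

```python
def blended_score(signals, weights):
    """Weighted average of signals, each assumed to be scaled to 0..1.

    Signals without an entry in `weights` are ignored.
    """
    total_weight = sum(weights.get(name, 0.0) for name in signals)
    if total_weight == 0:
        return 0.0
    weighted_sum = sum(
        value * weights.get(name, 0.0) for name, value in signals.items()
    )
    return weighted_sum / total_weight


# Hypothetical item: a strong match for the editor's past choices,
# with moderate traffic from the analytics side.
signals = {"editor_fit": 0.8, "views": 0.5, "clicks": 0.2}

# Hypothetical dashboard settings: editor judgment counts double.
weights = {"editor_fit": 2.0, "views": 1.0, "clicks": 1.0}

score = blended_score(signals, weights)
```

Turn the `views` weight up and popular items start to dominate; turn it down to zero and the curator’s own taste is all that counts.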

Shhhhh!

CIThread is still at a pretty early stage. It has some early test customers, but none can be identified just yet. I’ll describe generally what one of them is doing, though.

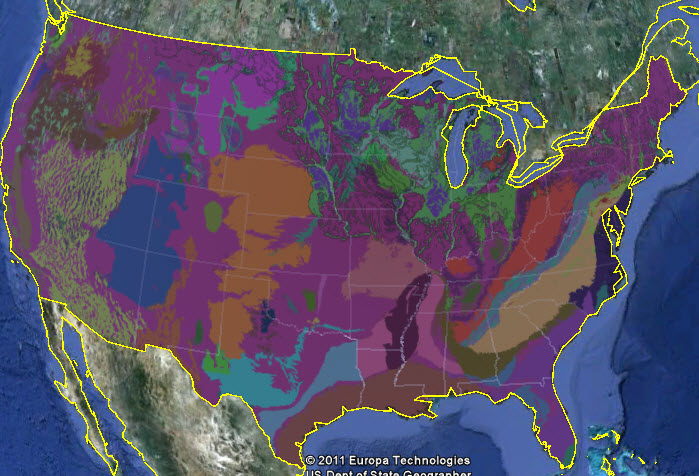

This company owns a portfolio of properties throughout the US and uses localized websites as both a marketing and customer service tool. Each site contains frequently updated news about the region, but the portfolio is administered centrally for cost and quality reasons.

Using CIThread, individual editors can now maintain literally dozens of these websites at once. The more the engine learns about their preferences, the more websites they can support. That’s one of the coolest features of inference engines: they get better the more you use them.

The technical brain behind CIThread is Mike Matchett, an MIT-educated developer with a background in computational linguistics and machine learning. The CEO is Tom Riddle (no relation to Lord Voldemort), a serial entrepreneur with a background in data communications, storage and enterprise software.

The two founders started out targeting professional editors, but I think the opportunity is much bigger. Nearly any company today can develop unique value for its constituents by delivering curated content. Using tools like CIThread, they can get very smart very fast.

If you want to hear more, e-mail curious@cithread.com or visit the website.