From Innovations, a website published by Ziff-Davis Enterprise from mid-2006 to mid-2009. Reprinted by permission.

Last week I spoke to a group of technology executives about the value of social networks. My presentation on this topic is full of optimism about the value that organizations can achieve from sharing the expertise of individual employees with internal and external constituents. The online knowledge maps that companies are now creating truly advance the cause of the corporate intranet.

Afterwards, a manager from a major technology company buttonholed me in the parking lot. His company is often held up as a shining example of progressive thinking in social media, and it has made Web 2.0 a foundation of its marketing plans. In light of that, what this manager told me came as a surprise.

His company was trying to encourage employees to blog and use an internal social network to share information and expertise fluidly amongst its huge global workforce. But it was being frustrated by the intransigence of many people, he said, particularly older employees. They simply refused to participate.

After overcoming my initial befuddlement, I realized that this situation is all too common in big companies. Senior employees resist sharing because they believe it threatens their job security. These people have spent many years developing their skills, and they fear that giving up that knowledge may make them irrelevant at a time when companies are all too willing to replace expensive senior people with low-paid twentysomethings.

The politics of sharing

I was reminded of a recent conversation I had with a friend who works in the IT department at a venerable insurance company. She told me that the manager of that group instructed employees not to share information about the best practices the group was employing in managing projects. The manager feared that disclosing that information would threaten his value to the organization.

As troubling as these stories seem, the motivations behind people’s behavior are understandable. Few companies give much credence to the value of institutional memory anymore. In fact, in today’s rapidly changing business climate, too much knowledge of the way things used to be done is often seen as a negative. But it’s really a negative only if people use memories as a way of avoiding change. Knowledge of a company’s culture and institutions is actually vital to the change process. Any CEO who’s been brought in from the outside to shake up a troubled company can tell you that his most valuable allies are the people who help him navigate the rocky channels of organizational change.

My suggestion to this manager was to learn from social networks. In business communities like LinkedIn, people gain prestige and influence by demonstrating their knowledge. The more questions they answer and the more contacts they facilitate, the greater their prestige. In social networks, this process is organic and motivated by personal ambition. Inside the four walls of a company, it needs some help.

As companies put internal knowledge networks into place, they need to take some cues from successful social media ventures.

- Voting mechanisms should be applied to let people rate the value of advice they receive from their colleagues.

- Those who actively share should be rewarded with financial incentives or simply with publicity in the company’s internal communications.

- Top executives should regularly celebrate the generosity of employees who share expertise.

- Top management should commit to insulating these people from layoffs and cutbacks.

Sharing expertise should enhance a person’s reputation and job security. Management needs to make the process a reward and not a risk.

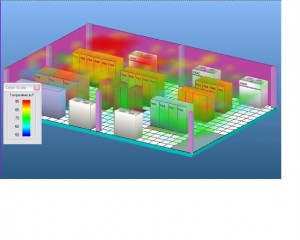
