From Innovations, a website published by Ziff-Davis Enterprise from mid-2006 to mid-2009. Reprinted by permission.

Does the following scenario sound familiar to you? An internal customer has come to you with a problem. Her group is consistently missing deadlines because of poor communication. E-mails frequently go unread for days, group members don’t respond to questions in a timely fashion and too many meetings are required to get everybody back on track. The manager has read about wikis in an airline magazine and thinks they are the perfect solution. She wants you to get one up and running as quickly as possible.

What do you do? Experienced project managers will tell you that the last thing you should do is install a wiki. Better solutions may be available, and your job as an IT professional is to analyze the needs the manager has defined and identify the most appropriate solution.

Tony Ulwick and his team at Strategyn would tell you to take one more step back. They’d see all kinds of problems in the needs statement just presented. For example, words like “consistently,” “frequently,” “timely” and “too many” are vague and subjective. Furthermore, the solution the manager seeks, better performance against deadlines, may fall far short of the bigger goal of improving group performance. You need to define the problem better before tackling a solution.

Optimizing inputs

Strategyn specializes in helping companies optimize customer inputs to improve innovation. Ulwick, who has published widely on this topic, believes that most projects fail not because customers don’t understand the problem but because the people trying to solve the problem don’t ask the right questions. Strategyn’s methodology starts with helping stakeholders define needs very specifically so that vendors and internal service organizations can innovate from them. That means discarding adjectives and subjective statements, talking about jobs instead of outcomes and using very specific terms.

Over the next two blog entries, I’ll present an interview with Tony Ulwick and Lance Bettencourt, a senior adviser at Strategyn. You can also find some helpful free white papers on this subject at the Strategyn website (you need to register to view them).

Q: You say businesses often respond to perceptions of customer need rather than actual defined needs. What governance principles do you believe internal service organizations can embrace to address these needs? Is a structured approach to needs definition necessary?

Bettencourt: Businesses try to respond to too many customer needs because they don’t know which needs are most unmet, so they hedge their bets. An organization must have a clear understanding of what a need is. Without a clear understanding and a structured approach to needs definition, anything that the customer says can pass for a need.

Many organizations are trying to hit phantom needs targets because they include solutions and specifications in their needs statements, use vague quality descriptors and look for high-level benefits that provide little specific direction for innovation.

Customer needs should relate to the job the customer is trying to get done; they should not include a solution or features. They should not use ambiguous terms. They should be as specific as possible and consistent with what the customer is trying to achieve. It’s important that a diverse body of customers be included in this research because different needs are salient to different customers. The ultimate goal is to capture all possible needs.

Q: Your advice focuses a lot on terminology. At times, your recommendations are as much an English lesson as a prescription for innovation! Why the emphasis on terms?

Ulwick: What distinguishes a good need statement from a bad need statement is not proper English, but precision. If a so-called need statement includes a solution, for example, then it narrows the scope of innovation to something the customer is currently using rather than what the customer is trying to get done.

Similarly, if a need statement includes ambiguous words such as “reliable,” it undermines innovation in multiple ways. Different customers won’t agree on what “reliable” means in a specific context, such as printing a document, or on what it means in general. This leads to internal confusion and ultimately to solutions that may not address the actual unmet need. Customer need statements have to be precise and focused if you want to arrive at an innovative solution.

Q: You suggest focusing on the job. What’s the definition of “job” for these purposes?

Ulwick: We mean the goal the customer is trying to accomplish or the problem the customer is trying to solve. A job is an activity or a process, so we always start with an action verb when we’re creating a job statement. Positions can’t be jobs. In fact, there may be multiple distinct jobs associated with a given position. For innovation purposes, “job” is also not restricted to employees. We’re not just talking about people trying to get their work done, but all their daily activities.

The best way to get at a clear definition of the job is to begin with the innovation objectives of the organization or, in your example, the IT department. If the goal is to create new innovations in an area where there are already solutions in place, then the organization should understand what jobs the customer is trying to get done with current solutions. If the goal is to extend the product line into new areas, then the definition should begin by understanding what jobs the customer is trying to do in a different but adjacent space. Defining the job helps the organization to achieve its innovation objectives.

Next week, Tony Ulwick and Lance Bettencourt tell how development organizations can ask the right questions to assess customer needs.

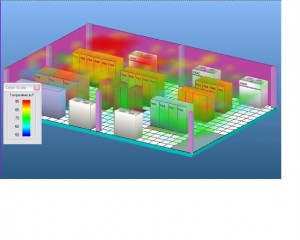

The graphic at right may look kind of cool, but it’s anything but. It’s actually a simulation of the heat distribution of a typical data center prepared by

The graphic at right may look kind of cool, but it’s anything but. It’s actually a simulation of the heat distribution of a typical data center prepared by  John Kao (right) believes the United States has an innovation crisis, and he’s calling on today’s corps of young technology professionals to sound the alarm.

John Kao (right) believes the United States has an innovation crisis, and he’s calling on today’s corps of young technology professionals to sound the alarm.